In this article, you will learn how to fetch data from an API using Python. We will fetch air pollution data through the API provided by the Indian government on data.gov.in.

What is an API?

API is the acronym for Application Programming Interface, which is a software intermediary that allows two applications to talk to each other. Each time you use an app like Facebook, send an instant message or check the weather on your phone, you’re using an API.

The platform data.gov.in provides summarised yearly air pollution data for different cities. If you want more detailed data, it also provides an API that collects hourly readings from approximately 1,240 stations across India.

Prerequisites:

If you want to follow along with this tutorial, you should have the following setup in your system:

1. Python 3 installed.

2. JupyterLab installed (optional; I will be using it)

3. An account on data.gov.in (to get your own API key)

Let’s get started with the code:

```python
# Import all required packages
import requests
import pandas as pd
import io
```

We start by importing the required packages. I will explain what each package is used for, and why we need it, as we go through the code.

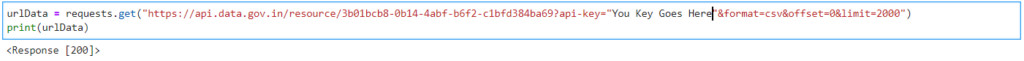

```python
# Replace YOUR_API_KEY with the key from your data.gov.in account
urlData = requests.get("https://api.data.gov.in/resource/3b01bcb8-0b14-4abf-b6f2-c1bfd384ba69?api-key=YOUR_API_KEY&format=csv&offset=0&limit=2000")
```

We create a variable `urlData` that holds the response returned by the API, which we request using the `get()` function from the Requests package.
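The request above packs the key and all the options into one long string. A less error-prone approach is to let Python assemble and escape the query string. The sketch below uses the standard library's `urllib.parse.urlencode`; the helper name `build_api_url` and the `YOUR_API_KEY` placeholder are hypothetical, while the resource ID and the `api-key`, `format`, `offset` and `limit` parameters come from the tutorial's URL.

```python
from urllib.parse import urlencode

# Resource ID taken from the tutorial's URL (air pollution dataset on data.gov.in)
BASE_URL = "https://api.data.gov.in/resource/3b01bcb8-0b14-4abf-b6f2-c1bfd384ba69"


def build_api_url(api_key: str, fmt: str = "csv", offset: int = 0, limit: int = 2000) -> str:
    """Return the full request URL with an encoded query string.

    build_api_url is a hypothetical helper for illustration only.
    """
    query = {"api-key": api_key, "format": fmt, "offset": offset, "limit": limit}
    # urlencode escapes special characters (spaces, '&', ...) in the values
    return f"{BASE_URL}?{urlencode(query)}"


url = build_api_url("YOUR_API_KEY")
print(url)
```

You would then pass `url` to `requests.get(url)`. Requests can also do the encoding for you if you pass the same dictionary through the `params` argument of `requests.get()`.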

You can choose the format in which the data is returned. There are three options:

1. XML

2. JSON

3. CSV

You make this choice when you create the API link on data.gov.in. You can also set a limit on the number of records returned per call. In my case, I set it to 2000: since there are approximately 1,240 stations across India, this is enough for every call to return all of the roughly 1,240 rows.
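If a dataset ever has more records than your `limit`, the `offset` parameter lets you request it in pages. The following is a minimal sketch that assumes `offset` counts records from the start of the dataset and that a page shorter than `limit` means nothing is left. `fetch_all_pages` and the `fetch_page` callback are hypothetical names; in practice `fetch_page` would wrap the `requests.get()` call above with the given `offset` and `limit`.

```python
from typing import Callable, List


def fetch_all_pages(fetch_page: Callable[[int, int], List[dict]], limit: int = 2000) -> List[dict]:
    """Collect records page by page using offset/limit pagination.

    Assumes fetch_page(offset, limit) returns at most `limit` records starting
    at `offset`, and that a short (or empty) page marks the end of the data.
    """
    records: List[dict] = []
    offset = 0
    while True:
        page = fetch_page(offset, limit)
        records.extend(page)
        if len(page) < limit:
            # Fewer records than requested: we have reached the end
            break
        offset += limit
    return records


# Usage with a fake data source standing in for the API (made-up data)
fake_rows = [{"id": i} for i in range(5)]
result = fetch_all_pages(lambda off, lim: fake_rows[off:off + lim], limit=2)
print(len(result))
```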

If we run this code, here is the output:

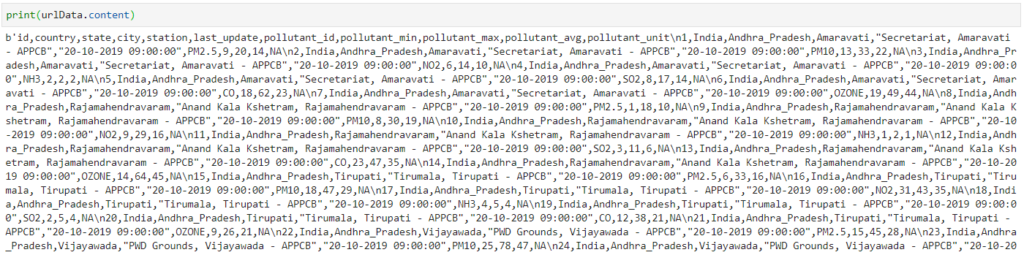

As you can see, the output is only `<Response [200]>`. The status code 200 means the request succeeded and the API returned the data. To see what we actually fetched, we access `urlData.content`, which holds the raw body of the response.
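Rather than checking for 200 by eye, you can let Requests do it: `Response.raise_for_status()` raises `requests.HTTPError` for 4xx and 5xx responses and does nothing otherwise. The sketch below combines that check with decoding `.content` (raw bytes) into text. `response_text` is a hypothetical helper, and UTF-8 is assumed as the encoding, matching the tutorial's own `decode('utf-8')` call.

```python
def response_text(response) -> str:
    """Return the body of a Requests response as text, failing loudly on HTTP errors.

    `response` is expected to be a requests.Response (or any object with the
    same raise_for_status() method and .content attribute).
    """
    # Raises requests.HTTPError for 4xx/5xx status codes; does nothing for 200
    response.raise_for_status()
    # .content holds the raw bytes of the body; decode them as UTF-8 text
    return response.content.decode("utf-8")


# Usage (requires network access and a valid key):
# import requests
# urlData = requests.get(url)
# csv_text = response_text(urlData)
```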

This prints the raw CSV text, which continues until all of the roughly 1,240 rows we fetched have been printed. We obviously want this in a more readable form, so we will use the pandas package to convert the CSV into a DataFrame.

```python
# Fetch the raw bytes of the CSV response (replace YOUR_API_KEY with your own key)
urlData = requests.get("https://api.data.gov.in/resource/3b01bcb8-0b14-4abf-b6f2-c1bfd384ba69?api-key=YOUR_API_KEY&format=csv&offset=0&limit=2000").content

# Convert the data to a pandas DataFrame
rawData = pd.read_csv(io.StringIO(urlData.decode('utf-8')))

# Display the first five rows
rawData.head()
```
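The `io.StringIO` wrapper is there because the CSV reader expects a file-like object rather than a plain string. The same decode-then-wrap step works with the standard library, and it extends naturally to the other formats. The sketch below handles CSV and JSON; `parse_records` is a hypothetical helper, and the assumption that JSON responses keep their rows under a top-level `"records"` key should be checked against an actual response from your API link.

```python
import csv
import io
import json
from typing import List


def parse_records(body: bytes, fmt: str) -> List[dict]:
    """Turn a raw API response body into a list of row dictionaries.

    Assumption: JSON responses store the rows in a top-level "records" list.
    """
    text = body.decode("utf-8")
    if fmt == "csv":
        # csv.DictReader needs a file-like object, hence io.StringIO
        return list(csv.DictReader(io.StringIO(text)))
    if fmt == "json":
        return json.loads(text)["records"]
    raise ValueError(f"unsupported format: {fmt}")


# Usage with a small made-up CSV body (not real API output)
sample = b"city,pollutant_id\nDelhi,PM2.5\nMumbai,NO2\n"
print(parse_records(sample, "csv"))
```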

You can fetch the data in any of the three formats and parse it with the appropriate parser. If you plan to fetch the data regularly and append new records every hour, you have two options. You can create and host a database, connect to it from Python and append your data there. Or, if you do not want to host a database, you can store your data in Google Sheets using the pygsheets package.
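The tutorials linked below cover MySQL and Google Sheets. As a self-contained stand-in, here is a sketch of the hourly append pattern using Python's built-in `sqlite3` module instead. Rows are inserted into a local table, and a primary key over station, pollutant and timestamp means that re-running the fetch does not store the same reading twice. The table name `air_quality` and its column names are hypothetical; map them to the columns your API response actually contains.

```python
import sqlite3
from typing import Iterable


def append_readings(conn: sqlite3.Connection, rows: Iterable[dict]) -> int:
    """Insert readings, skipping any already stored; return how many were new.

    The table and column names are hypothetical placeholders.
    """
    conn.execute(
        """CREATE TABLE IF NOT EXISTS air_quality (
               station TEXT,
               pollutant_id TEXT,
               last_update TEXT,
               pollutant_avg REAL,
               PRIMARY KEY (station, pollutant_id, last_update)
           )"""
    )
    before = conn.total_changes
    # INSERT OR IGNORE skips rows whose primary key already exists
    conn.executemany(
        "INSERT OR IGNORE INTO air_quality VALUES "
        "(:station, :pollutant_id, :last_update, :pollutant_avg)",
        rows,
    )
    conn.commit()
    return conn.total_changes - before


# Usage with an in-memory database and a made-up reading
conn = sqlite3.connect(":memory:")
hour_1 = [{"station": "Station A", "pollutant_id": "PM2.5",
           "last_update": "01-01-2024 10:00:00", "pollutant_avg": 80.0}]
print(append_readings(conn, hour_1))
# Running the same fetch again stores nothing new
print(append_readings(conn, hour_1))
```

Scheduling the fetch itself (for example with cron or a task scheduler) is left out; the function only handles the append step.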

Here are the links to both tutorials:

Tutorial: How to Read and Write data from Python to Google Sheets

Tutorial: How to Read and Write data from Python to MySQL DB